The ethical use of AI-powered tools for the education and learning of adults

Artificial Intelligence (AI) is now a pervasive phenomenon. Almost all generations use multiple AI-powered tools in everyday life, for study, at work and in their leisure time; hence, they are expected to “collaborate and cooperate” with AI across multiple contexts (Laupichler et al., 2022, p. 2). Meanwhile, organisations in the public and private sectors are implementing or experimenting with various applications of AI-powered tools to support work processes, service delivery and the continuing education of employees. Public and private educational providers are no exception, given that AI-powered tools such as intelligent tutoring systems or language learning apps, to mention a few, can support programme development as well as teaching and learning practices.

AI-powered tools in support of the education and learning of adults

Various AI-powered tools can be used in the education and learning of adults in educational institutions, at work, and in self-directed learning activities. Among such tools, conversational agents are now common. Also known as chatbots, they are software that can mimic human conversation through text or voice interaction. Chatbots are usually built on machine learning, that is, algorithms applied to input data that enable digital devices to learn and improve at a specific task by identifying patterns and making predictions. More advanced chatbots, such as ChatGPT (where GPT stands for Generative Pre-trained Transformer), DeepSeek and similar tools, are examples of generative AI: they can process large amounts of input data, learn from it by identifying patterns, and then generate new data or outputs in the form of text, images or music (Labadze et al., 2023).

Both educators and learners can use chatbots and, increasingly, generative AI-powered tools to search for and synthesise information, to produce teaching materials (educators) or study syntheses (learners), and to produce novel texts, images or music. Such activities can help educators and learners in many ways, yet some are more ethically sensitive than others.

A more instrumental use, like summarising a text, is less problematic (although users should be aware that summaries produced by AI-powered tools may not capture the essence of a text as they themselves would have done). More substantive uses, however, like accessing information or producing a novel text or image, are more ethically charged. Engaging in a conversation with a chatbot requires the ability to enter appropriate prompts to generate satisfactory results, which can stimulate the user's critical thinking and problem-solving skills. Yet such conversations also require the user to critically evaluate the validity of the information retrieved and its source, which is not always known to the user. Finally, intellectual honesty is needed so that users do not present an output produced by an AI-powered tool as their own original creation.

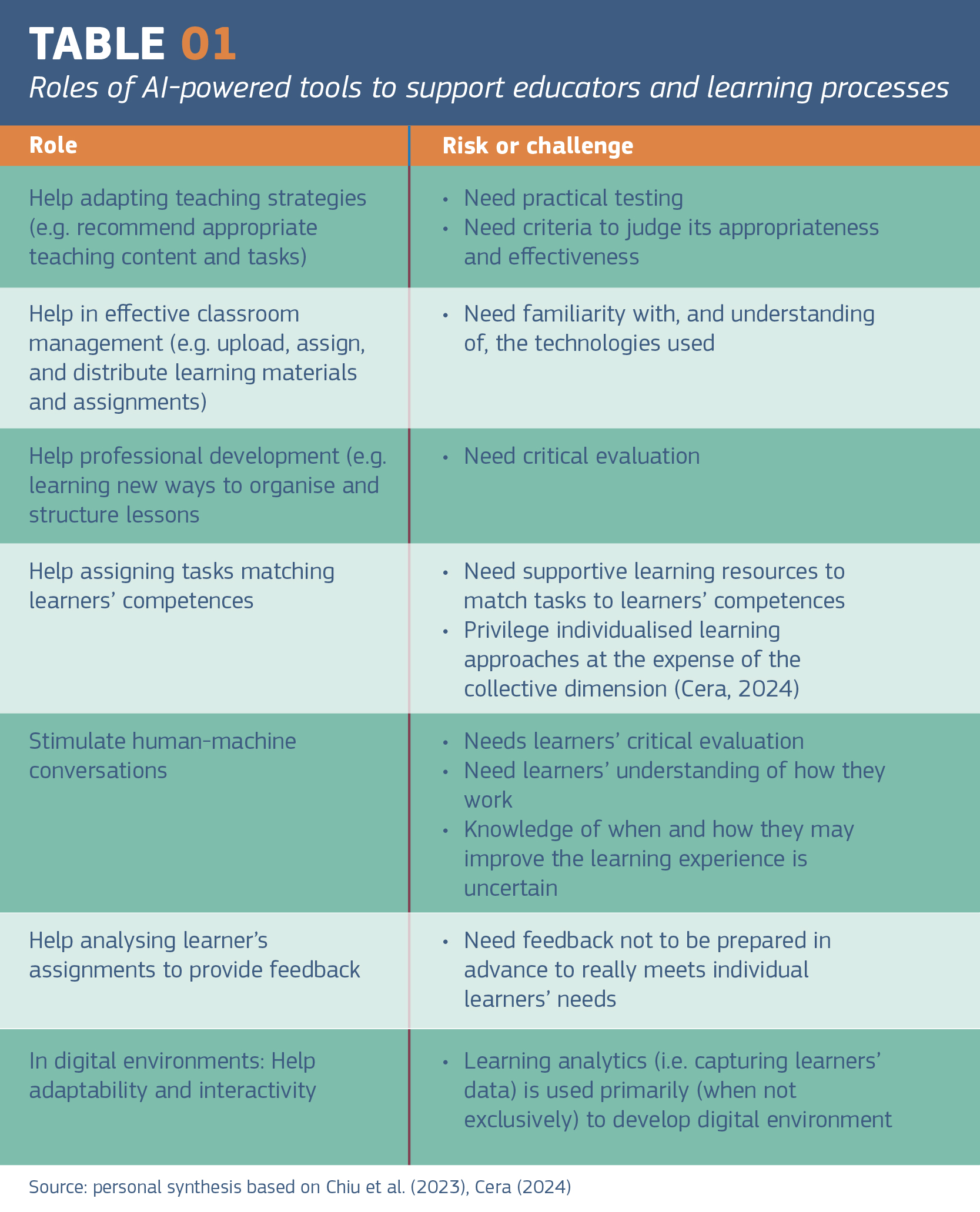

Researchers have identified different roles that AI-powered tools can play in supporting educators and learning processes (Chiu et al., 2023); each of these roles also presents ethical risks or challenges for achieving meaningful learning (see Table 1).

Regardless of the philosophical, psychological or moral concerns that organisations and individuals may have about the use of AI-powered tools, there are inescapable ethical dimensions that educational providers should consider when developing their provision. It is equally important for educators and learners to be aware of the ethical dimensions of using AI-powered tools when engaging in teaching and learning practices (Mouta et al., 2023).

The AI Act: human at the centre

Ethical concerns are at the heart of the AI Act, the European legal framework approved by the European Parliament and the Council to regulate the effective and safe use of AI. Adopting an anthropocentric approach, the AI Act requires that AI outputs always remain under human control and supervision and that technologies do not undermine fundamental rights (see the Charter of Fundamental Rights of the European Union). Accordingly, the AI Act identifies those AI systems that pose significant risks of harm to people's health, safety or fundamental rights. In education, high-risk AI systems include those used to determine access or admission to educational institutions, to evaluate learning outcomes and steer the learning process, and to assess the level of education that a person will receive or be able to access (Annex III of the AI Act). For this reason, the AI Act foresees that

AI literacy should equip providers, deployers and affected persons with the necessary notions to make informed decisions regarding AI systems. (AI Act, para. 20)

AI literacy or AI capabilities?

AI literacy can mean somewhat different things. One possible definition refers to

a set of competencies that enables individuals to critically evaluate AI technologies, communicate and collaborate effectively with AI, and use AI as a tool online, at home, and in the workplace (Long & Magerko, 2020, p. 2).

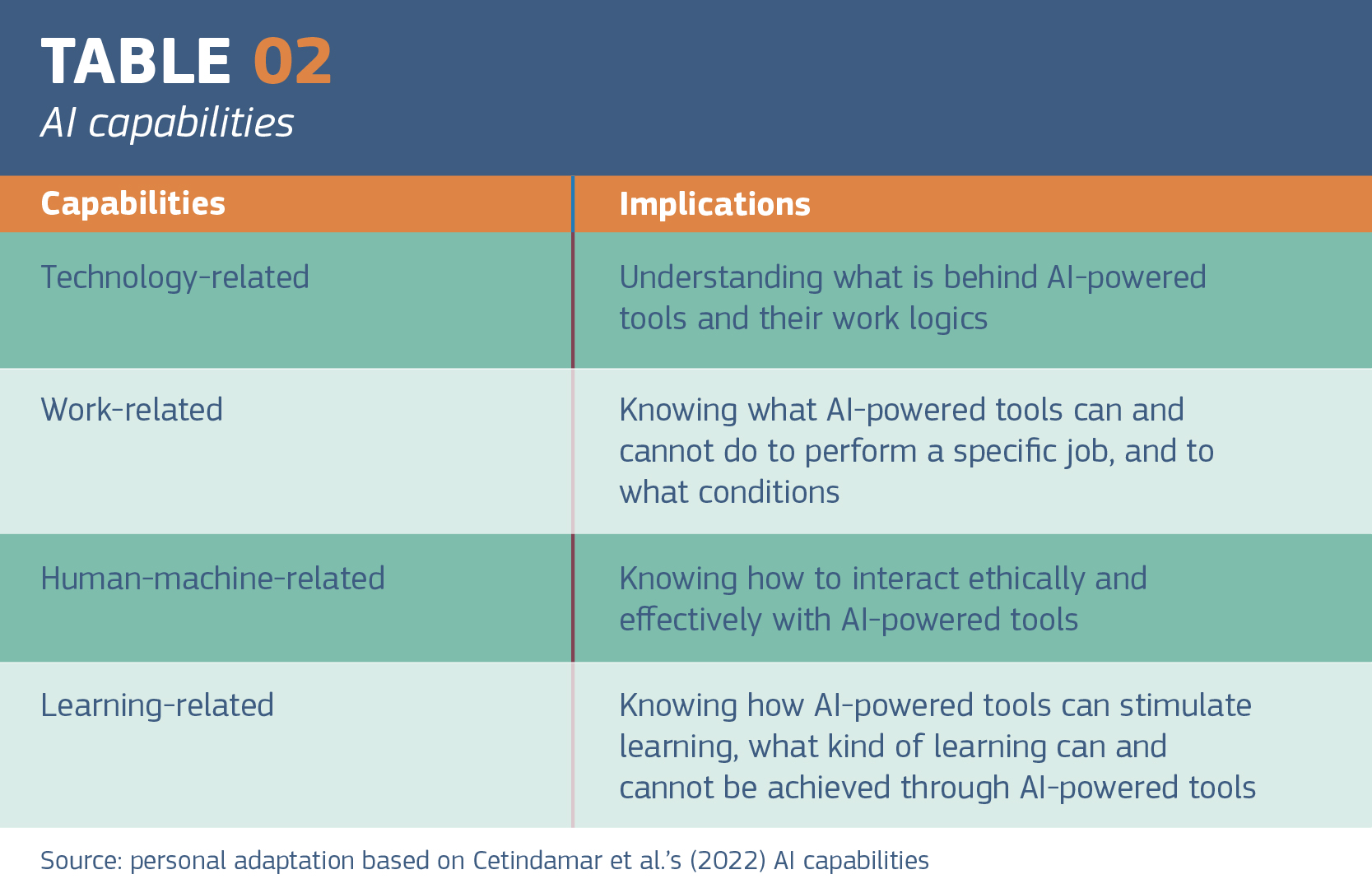

Understood this way, AI literacy encompasses specific knowledge as well as the capacity to understand, use and apply AI-powered tools and to evaluate the ethical dimension of acquiring and creating new knowledge through them; it is therefore not limited to expertise directly related to AI-powered tools. For this reason, it would be more appropriate to speak of AI capabilities rather than AI literacy when seeking to secure the ethical use of AI-powered tools in various contexts, including educational settings and the workplace (Cetindamar et al., 2022) (see Table 2).

AI capabilities and codes of conduct

To advance its anthropocentric approach and ensure that technologies do not affect people’s fundamental rights, the AI Act also foresees an active engagement of the European Commission and the Member States in ensuring that voluntary codes of conduct are developed in cooperation with relevant stakeholders.

Although various handbooks and guidelines on the use of AI-powered tools aimed at educators and learners have been produced over the years, such as Generative AI in Student-Directed Projects: Advice and Inspiration, developing codes of conduct is crucial for educational providers to support ethically sound use of AI-powered tools by educators and learners while advancing their AI capabilities.

References

Cera, R. (2024). Intelligenza artificiale ed educazione continua democratica degli adulti: personalizzazione, pluralismo e inclusione [Artificial intelligence and democratic adult continuing education: personalisation, pluralism and inclusion]. Lifelong Lifewide Learning, 22(45), 117–129. https://doi.org/10.19241/lll.v22i45.894

Chiu, T., Xia, Q., Zhou, X., Chai, C. S., & Cheng, M. (2023). Systematic literature review on opportunities, challenges, and future research recommendations of artificial intelligence in education. Computers and Education: Artificial Intelligence, 4, 100118.

Labadze, L., Grigolia, M., & Machaidze, L. (2023). Role of AI chatbots in education: Systematic literature review. International Journal of Educational Technology in Higher Education, 20, 56.

Laupichler, M. C., Aster, A., Schirch, J., & Raupach, T. (2022). Artificial intelligence literacy in higher and adult education: A scoping literature review. Computers and Education: Artificial Intelligence, 3, 100101. https://doi.org/10.1016/j.caeai.2022.100101

Long, D., & Magerko, B. (2020). What is AI literacy? Competencies and design considerations. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems (CHI ’20) (pp. 1–16). New York, NY, USA: Association for Computing Machinery.

Mouta, A., Pinto-Llorente, A. M., & Torrecilla-Sánchez, E. M. (2023). Uncovering blind spots in education ethics: Insights from a systematic literature review on artificial intelligence in education. International Journal of Artificial Intelligence in Education, 1–40.

Bio

Marcella Milana is an Associate Professor at the University of Verona and an Honorary Professor of Adult Education at the University of Nottingham. She specialises in adult education and has researched and written extensively on adult education governance, policies and practices. She is also chair of the European Society for Research on the Education of Adults (ESREA), editor-in-chief of the International Journal of Lifelong Education, and an EPALE Adult Learning Expert.

Comments

Roles of stakeholders in developing codes of conduct

I agree with the author of the post that developing codes of conduct is crucial for educational providers to support ethically sound use of AI-powered tools by educators and learners while advancing their AI capabilities.

However, there are also questions about which stakeholders should be involved in developing voluntary codes of conduct, since interests in the use of AI are diverse. A certain level of self-restraint in using AI is therefore needed, while leaving enough freedom to avoid either over- or under-regulating this matter. In other words, codes of conduct must be developed with great care to make them realistic and human-oriented.

Rapid developments remain a challenge

A timely and important discussion. As AI tools become more common in education, it’s essential to ensure they are used ethically and responsibly, especially in adult learning contexts. One of the key challenges I see is that educators, especially in adult education, and the education system as a whole are struggling to keep pace with rapid developments in AI and AI tools. These changes are also affecting adult learners and other students. While AI continues to advance quickly, many individuals are still struggling with basic digital literacy skills.

Thank you for this…

Thank you for this thoughtful and timely article, Marcella! Ethical use of AI in adult education is a crucial topic, especially as AI tools become increasingly integrated into teaching and learning. I fully agree that educators need not only technical skills but also critical thinking, intellectual honesty, and awareness of risks and rights when working with AI.

For educators and trainers who wish to develop their AI skills and explore these ethical and pedagogical aspects together with colleagues from across Europe, we warmly invite you to our Erasmus+ training course “AI Tools for Meaningful Learning and Training”. The course offers hands-on experience with AI tools, discussions on ethical dilemmas, and opportunities to build your own AI toolbox for different stages of the learning process.

A few places are still available for the upcoming course in Malta!