AI in Guidance – Curse or Blessing?

Author: Marc Schreiber

Use of chatbots in guidance

The use of AI algorithms, and chatbots in particular, is an increasingly relevant topic in guidance. AI algorithms and chatbots can support tasks such as writing motivation letters or matching people with suitable jobs or training programmes. Matching algorithms are typically based on machine learning (ML), while chatbots such as ChatGPT are based on generative AI. Chatbots generate content such as text or images; in the case of text, they work by repeatedly predicting the next word. They are trained to simulate human communication and thus enable interaction. However, there are challenges, such as data protection and the difficulty of transferring generic output to a person's individual reality. Human interaction that takes the individual's context into account therefore cannot be replaced by chatbots in guidance. Individual steps, such as evaluating and, where necessary, interpreting questionnaires, can be automated and facilitated by chatbots, but the counsellor's analytical ability (thinking) and intuition (feeling) are still required.
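To make "predicting the next word" more concrete, the following Python sketch builds a deliberately tiny word-level model: it counts which word follows which in a short sample text (a so-called bigram model) and then always picks the most frequent successor. This is a simplification under stated assumptions: real chatbots such as ChatGPT use large neural networks over sub-word tokens rather than word counts, and the sample sentence and function names are hypothetical, chosen only for illustration.

```python
import re
from collections import Counter, defaultdict
from typing import Optional


def train_bigrams(text: str) -> dict[str, Counter]:
    """Count, for every word, how often each other word directly follows it."""
    words = re.findall(r"\w+", text.lower())
    followers: dict[str, Counter] = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        followers[current][following] += 1
    return followers


def predict_next(followers: dict[str, Counter], word: str) -> Optional[str]:
    """Return the most frequent successor of `word`, or None if it was never seen."""
    counts = followers.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


def generate(followers: dict[str, Counter], start: str, max_words: int = 5) -> str:
    """Extend `start` greedily, one predicted word at a time."""
    out = [start.lower()]
    for _ in range(max_words):
        following = predict_next(followers, out[-1])
        if following is None:
            break
        out.append(following)
    return " ".join(out)


if __name__ == "__main__":
    # Hypothetical sample text; a real model is trained on vastly more data.
    model = train_bigrams(
        "AI can support guidance. AI can support counselling. AI cannot replace counsellors."
    )
    print(predict_next(model, "AI"))           # can
    print(generate(model, "AI", max_words=2))  # ai can support
```

Nothing in these counts "knows" what guidance or counselling is; the continuation is plausible only because that word sequence occurred frequently in the input.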

How does a "human" work?

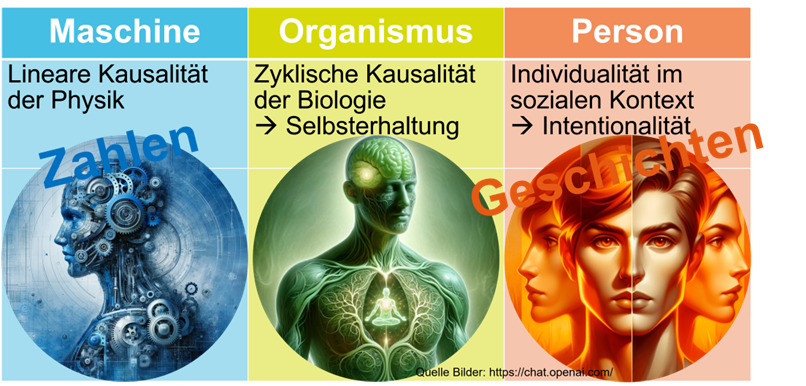

The "human" is a complex being whose functioning can be understood on several levels. Within psychology, a distinction can be made between three images of the human being (Herzog, 2022; see also Gloor & Schreiber, 2023):

In the image of the human as a machine, the human is understood by analogy with a machine: it functions according to physical laws and has no inner drive. In the image of the human as an organism, it is assumed that humans, as biological beings, strive for self-preservation and are therefore driven from within. This results in complex interactions between people (circular causality). In the image of the human as a person, it is assumed that each person ‘works’ in a unique way, depending on the social context in which they find themselves.

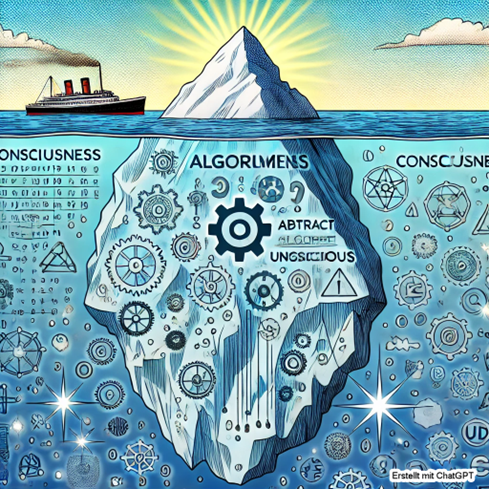

Psychologically, human behavior is influenced by thinking and feeling processes that can be divided into explicit and implicit areas. Models such as Kuhl's PSI theory (Kuhl, 2001, 2018; see also Schreiber, 2022) make it clear that humans switch between analytical-sequential thinking and intuitive-parallel feeling. Analytical-sequential thinking takes place explicitly, whereas intuitive-parallel feeling takes place implicitly and often remains unconscious for a long time.

The iceberg model provides a vivid illustration of how conscious and unconscious processes influence action and experience. While the visible tip of the iceberg represents conscious cognitive processes (the explicit), the larger invisible part represents implicit, intuitive processes (the implicit).

How does the world of work "work"?

The world of work has changed fundamentally as a result of the various industrial revolutions and of digitalization.

Application processes, for example, have shifted from manual procedures to automated platforms. In Work 4.0, traditional AI is also used to evaluate applicants against predefined criteria and to make a pre-selection. Machine learning (ML) algorithms can analyze data to identify patterns and make predictions. In addition, generative AI can create new content, as explained above using the example of chatbots.
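Pre-selection on the basis of predefined criteria can be illustrated with a minimal Python sketch. The applicant fields, criteria and thresholds below are hypothetical and not taken from any real recruiting platform; the point is only that every rule is written by people in advance and nothing is learned from data, in contrast to the ML approach that identifies patterns.

```python
from dataclasses import dataclass


@dataclass
class Applicant:
    name: str
    years_experience: int
    has_degree: bool
    languages: set[str]


# Hypothetical, hand-written criteria: fixed in advance by people, not learned.
MIN_YEARS = 2
REQUIRED_LANGUAGES = {"German", "English"}


def passes_preselection(a: Applicant) -> bool:
    """Return True if the applicant meets every predefined criterion."""
    return (
        a.years_experience >= MIN_YEARS
        and a.has_degree
        and REQUIRED_LANGUAGES <= a.languages  # all required languages present
    )


def preselect(applicants: list[Applicant]) -> list[str]:
    """Names of the applicants who pass every rule, in input order."""
    return [a.name for a in applicants if passes_preselection(a)]


if __name__ == "__main__":
    pool = [
        Applicant("A", 3, True, {"German", "English", "French"}),
        Applicant("B", 5, False, {"German", "English"}),  # no degree
        Applicant("C", 1, True, {"German", "English"}),   # too little experience
    ]
    print(preselect(pool))  # ['A']
```

Because the rules are explicit, one can state exactly why an applicant was filtered out; at the same time, the choice of criteria reflects the assumptions of whoever wrote them.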

How does AI "work"?

Artificial intelligence is based on algorithms and the processing of large amounts of data. ‘Weak’ AI solves specific tasks, such as recognizing patterns or analyzing text data. ‘Strong’ AI, on the other hand, seeks to fully replicate human cognitive abilities. Strong AI has not yet been achieved, and it is questionable whether it ever will be. Generative AI, as found in chatbots, creates new content by predicting the next most likely step in a sequence. However, it remains opaque in many respects: it is a black box whose internal processes cannot be traced. In addition, AI models reflect the values and biases of their developers, which raises ethical questions. Using AI responsibly means taking these aspects into account in practice. AI does not ‘understand’ anything, yet using AI applications can suggest that it does, as shown in the following figure:

Potential hazards

The integration of AI and chatbots into guidance entails risks. It should be borne in mind that tasks and competences handed over to AI will sooner or later be ‘unlearned’ by humans. A potential danger here is the loss of basic human skills such as thinking and feeling.

Dependence on technology can lead people to question less critically and to unlearn important thinking processes. Automated navigation is one example: although we become ever more proficient at using navigation apps and train our digital skills in the process, we can lose both spatial thinking (e.g. finding our way around a city) and our connection to the local context (e.g. to the city we are in and to the people who live there).

Dependence on technology can also cause people to unlearn important feeling processes. Excessive use of social media (e.g. YouTube, Instagram, LinkedIn, TikTok) illustrates this. Our click behavior is optimized in the interests of the app providers so that they can place as much advertising as possible. To this end, our feelings, such as the feeling of having enough, must be suppressed as far as possible. Various industries (e.g. food, electronics, fashion and even Big Tech) use AI algorithms specifically to suggest that we can never have enough of something. Taking this a step further, one could argue that the same principle underlies the social (e.g. the pension system) and economic (e.g. economic growth) systems in which we live, with all the advantages and disadvantages that come with them.

Ethical concerns about the use of AI algorithms in guidance relate in particular to the lack of transparency of AI systems mentioned above, but also to data protection and to potential distortions caused by developers' values. These developments raise the question of how AI can be used so that it complements people wherever this is in their own interest (and not only in the interest of app developers). This can be the case, for example, when large amounts of data need to be processed. The AMS Berufsinfomat[1] is a promising example from Austria.

Finally, it should be stressed once again that the use of AI in guidance must be carefully planned. AI can support processes, but it cannot take them over completely.

References

Gloor, P., & Schreiber, M. (2023). AI in psychology – Is man a machine? Springer.

Herzog, W. (2022). Human Images in Psychology. In M. Zichy (Ed.), Handbook of Human Images. Springer VS. https://doi.org/10.1007/978-3-658-32138-3_20-1

Kuhl, J. (2001). Motivation and personality. Hogrefe.

Kuhl, J. (2018). Individual differences in self-regulation. In J. Heckhausen & H. Heckhausen (Eds.), Motivation and action (pp. 529–577). Springer.

Schreiber, M. (Ed.). (2022). Narrative approaches in counselling and coaching: The Model of Personality and Identity Construction (MPI) in practice. Springer Wiesbaden. https://doi.org/10.1007/978-3-658-37951-3

About the author:

Prof. Dr. Marc Schreiber is a psychologist, career consultant and professor of career and personality psychology at the IAP Institute for Applied Psychology at the ZHAW Zurich University of Applied Sciences. You can find more information on the IAP homepage: https://www.zhaw.ch/en/ueber-uss/person/scri/

[1] https://www.ams.at/jobseekers/training/professional information/professional information/professional infomat#wien

About this blog:

This blog is based on a workshop held by the author at the Euroguidance symposium 2024.